PureGen: Universal Data Purification for Train-Time Poison Defense via Generative Model Dynamics

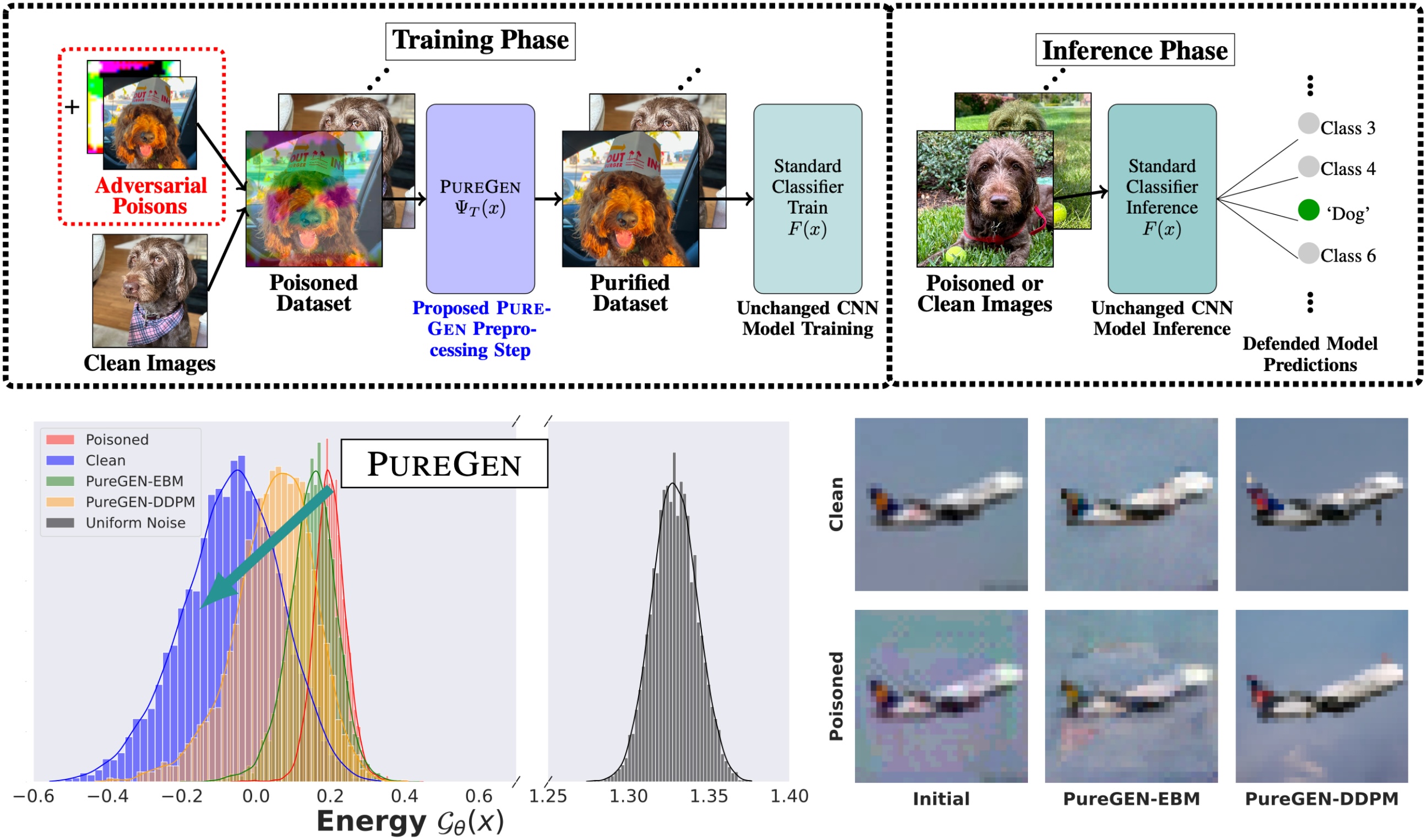

Train-time data poisoning attacks compromise machine learning models by injecting adversarial examples into the training data, causing the trained model to misclassify. We propose universal data purification methods using a stochastic transform, Ψ(x), implemented via iterative Langevin dynamics of Energy-Based Models (EBMs) and Denoising Diffusion Probabilistic Models (DDPMs). Our approach purifies poisoned data with minimal impact on classifier generalization and achieves state-of-the-art defense performance without requiring specific knowledge of the attack or classifier.
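To give a rough sense of what an iterative Langevin update looks like, here is a minimal sketch in NumPy. It is not the PureGen implementation: the quadratic energy `toy_energy_grad`, the function name `langevin_purify`, and all step counts and step sizes are illustrative assumptions, standing in for the gradient of a trained EBM and its tuned hyperparameters.

```python
import numpy as np

def toy_energy_grad(x, mu):
    # Gradient of the toy energy E(x) = 0.5 * ||x - mu||^2.
    # Assumption: this stands in for the gradient of a trained EBM.
    return x - mu

def langevin_purify(x, grad_fn, n_steps=100, step_size=0.01, rng=None):
    # Hypothetical helper: runs the unadjusted Langevin update
    #   x <- x - step_size * grad E(x) + sqrt(2 * step_size) * z,  z ~ N(0, I)
    # for n_steps iterations and returns the final sample.
    rng = np.random.default_rng() if rng is None else rng
    x = np.array(x, dtype=float)  # copy so the caller's array is not modified
    for _ in range(n_steps):
        noise = rng.standard_normal(x.shape)
        x = x - step_size * grad_fn(x) + np.sqrt(2.0 * step_size) * noise
    return x

# Toy usage: a batch of 8 four-dimensional points placed far from the
# low-energy region around mu, then moved by Langevin dynamics.
rng = np.random.default_rng(0)
mu = np.zeros(4)
x_poisoned = np.full((8, 4), 5.0)
x_purified = langevin_purify(
    x_poisoned, lambda x: toy_energy_grad(x, mu), n_steps=500, rng=rng
)
```

In this toy setting the samples drift toward the low-energy region around `mu` while the injected noise keeps the transform stochastic. With a learned energy over images, the same loop would play the role of Ψ(x) applied to each training example before classifier training.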
